Debugging AI Conversations for Admins

Between 2020 and 2023, I worked on a chatbot/virtual assistant platform that provided knowledge and processes to enterprise organizations. While advances in AI/NLP made it possible to deliver much of this content automatically, custom-built conversations were still necessary. Building them, however, was complex and discouraging for some admin customers, so we aimed to create a simpler, sustainable way for everyone to build conversations. A crucial element of conversation design was the ability to debug and reverse-engineer conversations in a way that promoted understanding and learnability.

Role

Lead Product Designer

Functions

Research, Interaction, Visual Design, Prototyping, Testing

Time

3 weeks

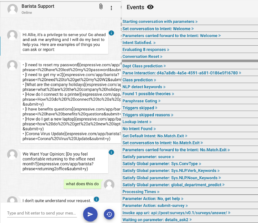

Reviewing the existing implementation

I began exploring by looking at the current tool and trying to understand its elements.

Though the virtual assistant presented a cohesive interface for users, its underlying architecture was hybrid, combining rules-based linguistic configuration with large language models like ChatGPT.

Custom conversations had to be created and tested in a proprietary content authoring platform.

Discovery

What I saw

- Far too many events, and within those events way too much data to comprehend (one even had 5,400 lines of JSON o.O)

- No clear visual hierarchy to indicate what was important

- No connection between the log events and the conversational content they produced

- No way to edit configurations, or even to find where to view or edit them

- Standard UI usability conventions were ignored completely: accordions had no affordances, and clicking certain elements produced unexpected results

- No search or filtering, which made it difficult to find anything specific

My Experience

- I felt overwhelmed and confused

- I had difficulty determining what’s important and what’s not

- I couldn’t find information that matched my intent

- I was unsure whether all of the information was necessary

- I had no idea where to begin

- I was having trouble following the flow of the conversation

- Some icons, modals, and functionality seemed to be broken

- It seemed I would need countless hours of training and/or experience just to begin understanding how to use this tool effectively

Validating my hypotheses

I sent a survey to internal conversation analysts and the customer success team; their responses informed my design decisions.

Q: Which events do you commonly utilize to investigate?

Results

Across the 42 event log types, the most commonly used information fell into a few clear categories. The responses showed that information in the following categories was used most frequently:

- Intent information – Intents were the core building blocks of the conversation designer.

- Conditions/Flow decision points – Conversational responses would be returned based on a number of configured elements

- Parameters – These were flexible variables that could be referenced and used to drive different conversation flows

Q: What difficulties do you face when using the current debugger?

Quotes from users

- “There is a lot of information in specific sections that could be collapsed into general ones. Also, the debugger results do not scroll down at the same time as the log results.”

- “It could be more visually friendly. Even with colors, the debugger section is heavy to navigate through the user interactions. It contains every detail from the backend, and it increases the time spent trying to find something specific”

- “Too much information, too many events, very small letters, it’s very overwhelming, I’ve learned to only look at what really works for me and ignore the rest, which is also bad because I don’t even know what it means and I don’t think anyone else does”

- “There is more information than the things I need to debug. This also could be overwhelming for the customers when we have the admin training.”

Understanding who we are building this for

The debugger was a specialized tool that required training to be utilized effectively. There were two customer personas that had a vested interest in it.

CIO

This persona was usually the one to champion the need and value of introducing the product.

Motivations:

- Save money by reducing the need for lower-level help desk staff & automating basic tasks

- Speed up time to resolution for issues

- Improve their employees’ perception of their department

Responsibilities:

- Prove ROI on the investment in the product

- Review/support the admin’s efforts

Help Desk Admin

This was the user assigned to manage and maintain the product according to company needs.

Motivations:

- Drive adoption and meet the goals of the CIO

- Reduce use of internal resources

- Personal development

- Get promoted

Responsibilities:

- Oversee/support onboarding & implementation

- Monitor adoption/data against goals

Defining measurable goals

I established some metrics that would let us know if we were on the right path.

- 60% reduction in tickets related to content editing

- 20% increase in customer-created conversations

- 70% reduction in debugger training time

Exploring possible solutions

My main focus was to make debugging logs more consumable by removing unnecessary items, grouping common content, and providing a visual hierarchical flow structure.
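A minimal sketch of the first two ideas, removing unnecessary items and grouping common content, is shown below in TypeScript. The `LogEvent` shape, the event type names, and the category mapping are hypothetical; the case study does not list the platform's actual 42 event types.

```typescript
// Hypothetical shape of a raw debugger event; not the platform's real schema.
interface LogEvent {
  id: string;
  type: string;
  payload: Record<string, unknown>;
}

// The three categories the survey pointed to, plus a bucket for everything else.
type Category = "intent" | "flow" | "parameter" | "other";

// Hypothetical mapping from raw event types to categories.
const CATEGORY_BY_TYPE: Record<string, Category> = {
  INTENT_MATCHED: "intent",
  CONDITION_EVALUATED: "flow",
  INTENT_TRANSITION: "flow",
  PARAMETER_SET: "parameter",
};

function categorize(event: LogEvent): Category {
  return CATEGORY_BY_TYPE[event.type] ?? "other";
}

interface EventGroup {
  category: Category;
  events: LogEvent[];
}

// Hides "other" events from the default view, then merges consecutive events of the
// same category into one group so the stream reads as a sequence of steps.
function simplify(events: LogEvent[]): EventGroup[] {
  const groups: EventGroup[] = [];
  for (const event of events) {
    const category = categorize(event);
    if (category === "other") continue;
    const last = groups[groups.length - 1];
    if (last && last.category === category) {
      last.events.push(event);
    } else {
      groups.push({ category, events: [event] });
    }
  }
  return groups;
}
```

Consecutive events of the same category collapse into one group, which reduces the number of top-level items a reader has to scan. The "other" events are only filtered out of this view, not discarded, so a real tool could still offer a way to bring them back.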

Brainstorming Concepts

I tried out different concepts to find a better approach to the presentation. I considered the following options:

- Combining the conversational user interface with the debugger log as a stream

- Presenting the debugging information as tabs attached to the content

- Using a workflow-style presentation

It was decided to incorporate the new debugger design into the existing structure due to limited resources and upcoming deadlines.

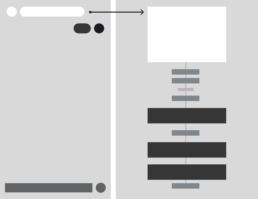

Simplification, Hierarchy & Flow

My main objective was to enhance the readability of the debugger information. This was accomplished through the following methods:

- Addition of intentional white space between elements

- Creation of a sense of flow within the event stream

- Visual treatment of important items

Viewing Information

To enable customers to view and modify the content, I proposed methods for users to drill down into specific event log items.

Connecting the chat to the event log items

To view information related to a specific content intent:

- Click on the button located near the content.

- The content area will be highlighted as selected.

- The relevant intent node will automatically scroll to a predictable position.

- The node will then open, revealing all the details related to the content.
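The four steps above can be modeled as a single state transition, which keeps the behavior predictable and easy to test. This is a hypothetical TypeScript sketch: `DebuggerState`, `selectContent`, and the content-to-node index are illustrative names, and the actual scrolling would be handled by the view layer reacting to `scrollTargetNodeId`.

```typescript
// Hypothetical UI state for the debugger panel.
interface DebuggerState {
  selectedContentId: string | null;
  expandedNodeIds: Set<string>;
  scrollTargetNodeId: string | null;
}

// Hypothetical index from a piece of chat content to the intent node that produced it.
type ContentToNode = Map<string, string>;

// Handles a click on the button next to a chat message: highlight the content,
// ask the log to scroll to the related intent node, and expand that node.
// Returns a new state object instead of mutating the old one.
function selectContent(
  state: DebuggerState,
  contentId: string,
  index: ContentToNode,
): DebuggerState {
  const nodeId = index.get(contentId);
  if (nodeId === undefined) {
    // No linked intent node: still highlight the content, but leave the log where it is.
    return { ...state, selectedContentId: contentId, scrollTargetNodeId: null };
  }
  const expanded = new Set<string>(state.expandedNodeIds);
  expanded.add(nodeId);
  return {
    selectedContentId: contentId,
    expandedNodeIds: expanded,
    scrollTargetNodeId: nodeId,
  };
}
```

Keeping the selection logic separate from rendering makes the "predictable position" requirement a view concern: the log only needs to scroll whichever node `scrollTargetNodeId` names.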

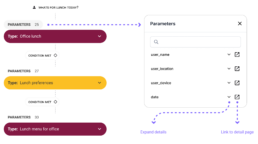

Creating the event log components

To make the application more flexible and scalable, and to allow easier enhancements based on feedback, I chose a component-based architecture. My understanding of component-based front-end development let me structure these components in a way that would ease the transition to development.
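One way to express this component structure in code is a discriminated union with one variant per event log component, so that adding a new component type becomes a compiler-checked change. This is a hypothetical TypeScript sketch: the field names are assumptions, and `summarizeNode` stands in for what would be separate UI components in practice.

```typescript
// Hypothetical props for the three event log components (intent, transition, parameter).
interface IntentNodeProps {
  kind: "intent";
  id: string;
  intentName: string;
}

interface TransitionNodeProps {
  kind: "transition";
  id: string;
  fromIntent: string;
  toIntent: string;
  reason: string;
}

interface ParameterNodeProps {
  kind: "parameter";
  id: string;
  name: string;
  value: unknown;
}

type EventLogNode = IntentNodeProps | TransitionNodeProps | ParameterNodeProps;

// Exhaustiveness helper: the compiler reports an error at the call site
// if a new kind is added to EventLogNode but not handled in the switch.
function assertNever(x: never): never {
  throw new Error(`Unhandled node: ${JSON.stringify(x)}`);
}

// Stand-in for rendering: each branch would map to its own component in a real UI.
function summarizeNode(node: EventLogNode): string {
  switch (node.kind) {
    case "intent":
      return `Intent: ${node.intentName}`;
    case "transition":
      return `${node.fromIntent} -> ${node.toIntent} (${node.reason})`;
    case "parameter":
      return `${node.name} = ${JSON.stringify(node.value)}`;
    default:
      return assertNever(node);
  }
}
```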

Intent Nodes

Intents form the foundation of a conversation between a user and an AI. When a user enters a message, the AI interprets the meaning of the words and matches the message to an intent. As a result, almost all visible content and configuration is linked to an intent, so it was crucial to give this category of events higher visual priority. To achieve this, I gave these nodes a larger size and higher visual contrast.

Flow/Transition Nodes

Understanding the events that trigger the logic and flow of a conversation between intents is crucial. One intent may have a condition that redirects the conversation to another intent based on various factors. It is important to know the reasons behind the switch to another intent and what occurred in between.
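To show how a transition and "what occurred in between" could be recovered from the log, here is a hypothetical TypeScript sketch. It assumes a simplified, chronologically ordered event stream in which intent matches and condition evaluations are logged; the event shapes are illustrative, not the platform's schema. Treating conditions that evaluated to true as the reason for a switch is a simple heuristic chosen for the example.

```typescript
// Hypothetical, simplified log entries, assumed to be in chronological order.
type FlowEvent =
  | { kind: "intentMatched"; intent: string }
  | { kind: "conditionEvaluated"; expression: string; result: boolean }
  | { kind: "other"; label: string };

interface Transition {
  from: string;
  to: string;
  // Conditions that evaluated to true after `from` matched: candidate reasons for the switch.
  triggeringConditions: string[];
  // Everything logged between the last match of `from` and the match of `to`.
  inBetween: FlowEvent[];
}

function extractTransitions(events: FlowEvent[]): Transition[] {
  const transitions: Transition[] = [];
  let current: string | null = null;
  let buffer: FlowEvent[] = [];
  for (const event of events) {
    if (event.kind === "intentMatched") {
      if (current !== null && event.intent !== current) {
        const reasons: string[] = [];
        for (const e of buffer) {
          if (e.kind === "conditionEvaluated" && e.result) reasons.push(e.expression);
        }
        transitions.push({ from: current, to: event.intent, triggeringConditions: reasons, inBetween: buffer });
      }
      // Start collecting events for the newly matched intent.
      current = event.intent;
      buffer = [];
    } else {
      buffer.push(event);
    }
  }
  return transitions;
}
```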

Parameters

Parameters are flexible values that can be created and modified by user interaction or other processes. They can be used to provide relevant information or processes to the user, and their evolution can be viewed throughout a conversation. Different intents or conversational AI artifacts can modify these parameters depending on the conversation design.
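Viewing a parameter's evolution amounts to filtering the log for changes to that parameter and pairing each new value with the one before it. The TypeScript sketch below is hypothetical: the `ParameterChange` shape and the `parameterHistory` name are assumptions, and the changes are assumed to arrive in chronological order.

```typescript
// Hypothetical, simplified parameter-change event.
interface ParameterChange {
  turn: number; // conversation turn in which the change was logged
  name: string;
  value: unknown;
  source: string; // the intent or artifact that set the value
}

interface ParameterHistoryEntry {
  turn: number;
  previous: unknown;
  value: unknown;
  source: string;
}

// Builds the history of one parameter. Assumes `changes` is in chronological order;
// each entry records the previous value so a reader can see how and where it changed.
function parameterHistory(changes: ParameterChange[], name: string): ParameterHistoryEntry[] {
  const history: ParameterHistoryEntry[] = [];
  let previous: unknown = undefined;
  for (const change of changes) {
    if (change.name !== name) continue;
    history.push({ turn: change.turn, previous, value: change.value, source: change.source });
    previous = change.value;
  }
  return history;
}
```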

Connecting the conversation with the event log

We needed to connect the conversation to the events in the log so that users could narrow down to relevant information more quickly.

A redesign of the conversational UI in the debugger was outside of the scope of the project, but I needed to make it easier to understand where the content in the conversation was coming from.

I connected the conversational UI view with the event data by adding icons that allowed the user to navigate to specific items in the event log or content authoring platform.

A debugging experience that was far more consumable

As the user interacted with the conversational UI, the event log updated with the new log components. The intentional visual treatment of the event log items helped the user understand the configured conversation flow.

The new debugger would allow users to explore and understand the important building blocks of conversations.

Internal Release

An alpha release was made available to internal users so that they could validate design decisions. It was important that the new debugger be used for a period of time so that I could discover any problems before releasing it to customer admins.

Feedback

I created a feedback mechanism with the internal users who utilized the tool most frequently.

- “This is so much easier to process, I still wish I could search things, but this is such a huge improvement!”

- “I actually learned how conversation flow works from this. I couldn’t figure it out before.”

- “I can actually see what intents go to what content? This is a gamechanger and it is going to save me so much time.”

- “I used this to debug an issue I was seeing already! It didn’t go all the way, but just what you did cut my time down by like 80%.”

- “This is going to be so much nicer to show our customer admins. I don’t think I will have to hold their hand as much now.”

Future Considerations

While working on the designs for the first version, I knew that some needed or requested functionality would not make the first release. There were also areas I suspected needed polishing or improvement.

- Searching – We needed a search mechanism to be able to easily find various artifacts

- Filtering – A reliable way to visually narrow down conversation flows to specific types of elements

- Parameter tracking – A way for users to select one or more parameters and see when and how they changed at any given point

- Internal/Inline editing – Allow users to edit and then retest directly in the debugger

- Advanced view – Find a way to reintroduce some of the lesser-used, but necessary data we removed

- Customization/Preferences – Basic customization to streamline users’ daily work

- Visual Styling – Revisit compromises that were made due to resource constraints

- Animation polishing